WebGL Fire Shader Based on Xflame

Over the holiday, we had the fun idea to put a TV in front of the fireplace we never turn on so we could run fireplace videos on it.

Really ties the room together.

It’s been a joy, but it got me thinking about what it would take to put a fire visualizer on there instead of videos of fireplaces. To that end, I’ve dusted off my old knowledge of GLSL and taken the opportunity to start experimenting with making a fire effect with WebGL shaders. This took me down a couple of fun paths!

These posts will assume some basic knowledge of how shaders work (that there are vertex and fragment shaders and you can build a program out of them, and the idea of rendering to texture). There are some great tutorials out there if you’re just getting started (I recommend The Book of Shaders, which I’ve been turning to repeatedly here).

In this journey, we’ll cover:

- Setting up the framework to experiment
- Rendering to texture
- Reverse-engineering Xflame’s effect
- Cheap pseudo-random numbers directly in the shader

Hello graphics world

WebGL is not my favorite API. It’s based on OpenGL ES and is very stateful. The advantage is that there’s ample room for optimization by skipping steps you don’t need; the disadvantage is that you either write an abstraction on top of it to keep things organized (sacrificing some of the benefits of that state) or track the details of all that state in your own code. But it’s the only thing built into the browser for controlling the graphics card directly, so let’s saddle up.

I took advantage of the WebGL Fundamentals tutorials to get off the ground. They have good ones on both setting up a program and rendering to a texture.

The finished project is here. Feel free to pop it open, view source, and follow along as I highlight some things.

I like how WebGL Fundamentals recommends using script tags with a notjs type to store shader code. It makes the code easy to find and use. Here’s the entire vertex shader as an example.

```html
<script id="vertex-shader" type="notjs">
attribute vec4 a_position;
attribute vec2 a_texcoord;

varying vec2 v_texcoord;

void main() {
  gl_Position = a_position;
  v_texcoord = a_texcoord;
}
</script>
```

Because the browser doesn’t recognize the notjs type, it leaves the contents alone, and you can fetch the shader source as `document.querySelector("#vertex-shader").text`.
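Turning that text into a usable program follows the standard WebGL compile-and-link pattern. Here’s a minimal sketch; the helper names (compileShader, shaderFromScript, linkProgram) are my own, and the "fragment-shader" id in the usage comment is an assumption, since only "vertex-shader" appears above.

```javascript
// Compile one shader stage from source text, throwing with the driver's log on failure.
function compileShader(gl, type, source) {
  const shader = gl.createShader(type);
  gl.shaderSource(shader, source);
  gl.compileShader(shader);
  if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
    const log = gl.getShaderInfoLog(shader);
    gl.deleteShader(shader);
    throw new Error(`Shader compile failed: ${log}`);
  }
  return shader;
}

// Hypothetical helper: pull the source out of a <script type="notjs"> tag by id.
function shaderFromScript(gl, type, id) {
  const source = document.querySelector(`#${id}`).text;
  return compileShader(gl, type, source);
}

// Link a vertex and a fragment shader into a program, throwing on link errors.
function linkProgram(gl, vertexShader, fragmentShader) {
  const program = gl.createProgram();
  gl.attachShader(program, vertexShader);
  gl.attachShader(program, fragmentShader);
  gl.linkProgram(program);
  if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
    const log = gl.getProgramInfoLog(program);
    gl.deleteProgram(program);
    throw new Error(`Program link failed: ${log}`);
  }
  return program;
}

// Usage in the browser ("fragment-shader" is an assumed id):
// const program = linkProgram(gl,
//   shaderFromScript(gl, gl.VERTEX_SHADER, "vertex-shader"),
//   shaderFromScript(gl, gl.FRAGMENT_SHADER, "fragment-shader"));
```

Failing loudly at compile and link time saves a lot of staring at a blank canvas, since WebGL otherwise just logs and keeps going.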

Most of the actual JavaScript sets up the shader program, a texture buffer to write to, and the canvas to output the final image to. A couple of things are worth noting in this setup:

We initialize the texture buffer representing the last frame of the fire effect to all black, except for the bottom row, where each pixel gets a random red intensity between 0 and 100%. That’ll be important for the visual feedback loop the Xflame algorithm uses.

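```javascript
// Set up a texture we render to representing previous state of the fire.
const lastFrame = gl.createTexture();
gl.bindTexture(gl.TEXTURE_2D, lastFrame);
const pixelBuffer = new Uint8Array(TEXTURE_BUFFER_SIZE * TEXTURE_BUFFER_SIZE * 4);

// ... and initialize the past frame to opaque black.
for (let i = 0; i < TEXTURE_BUFFER_SIZE; i++) {
  for (let j = 0; j < TEXTURE_BUFFER_SIZE; j++) {
    const offset = TEXTURE_BUFFER_SIZE * 4 * i + 4 * j;
    pixelBuffer[offset] = 0;       // R
    pixelBuffer[offset + 1] = 0;   // G
    pixelBuffer[offset + 2] = 0;   // B
    pixelBuffer[offset + 3] = 255; // A
  }
}

// ... except along the bottom row (the first row of pixel data lands at texture
// coordinate y = 0). There we pick random initial colors from black to full red.
for (let i = 0; i < TEXTURE_BUFFER_SIZE; i++) {
  pixelBuffer[4 * i] = Math.floor(Math.random() * 256); // 0..255 inclusive
  pixelBuffer[4 * i + 1] = 0;
  pixelBuffer[4 * i + 2] = 0;
  pixelBuffer[4 * i + 3] = 255;
}

// Upload the initial state, then sample exact texels and clamp at the edges
// (no mipmaps needed, and non-power-of-two sizes stay valid in WebGL 1).
gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, TEXTURE_BUFFER_SIZE, TEXTURE_BUFFER_SIZE, 0,
              gl.RGBA, gl.UNSIGNED_BYTE, pixelBuffer);
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
```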

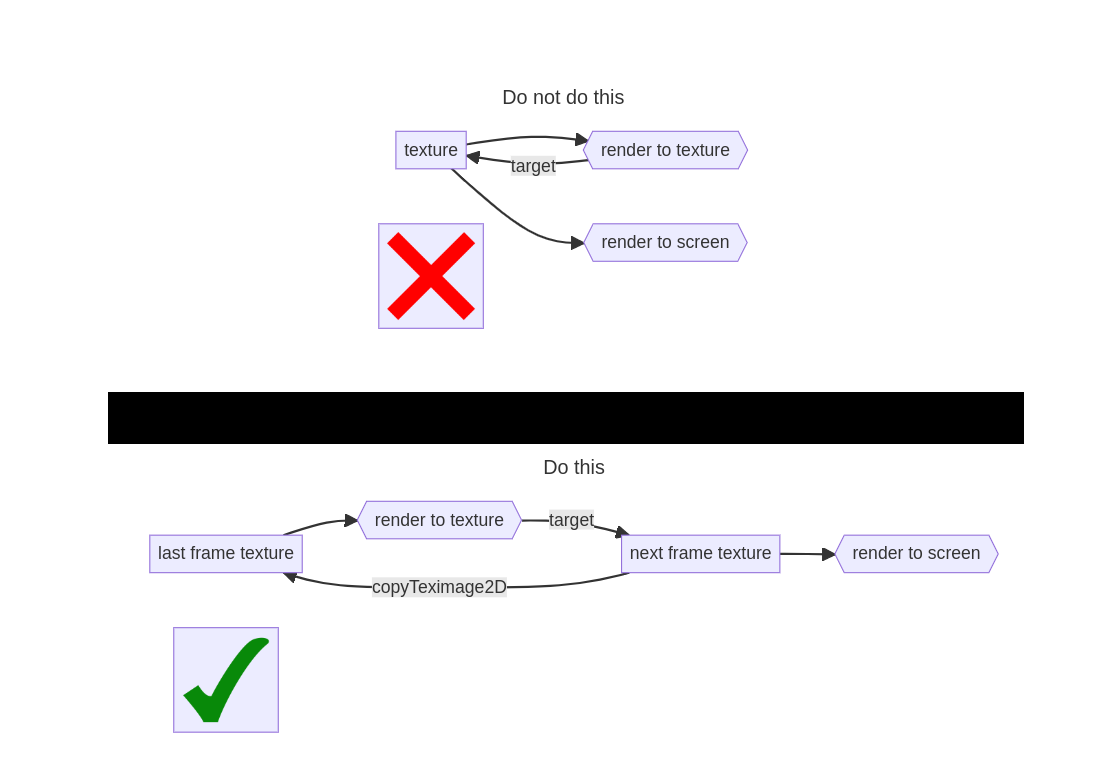

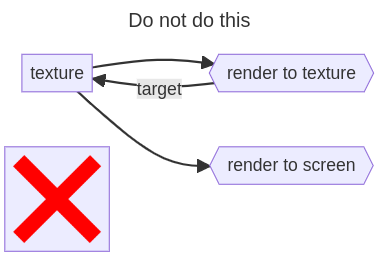

We also have to initialize two textures: one that holds the previous frame as our source (lastFrame), and one we write into to build the next frame (nextFrame). We need both because WebGL doesn’t allow sampling from a texture while it’s attached to the framebuffer you’re rendering into; Chrome, in particular, will fire off a `GL_INVALID_OPERATION: Feedback loop formed between Framebuffer and active Texture` error and give garbage graphical output. So instead, we render into nextFrame using lastFrame as the lookup, use copyTexImage2D to copy that output back into lastFrame, and then use nextFrame to draw to the screen.

Illustration of the process. Note that this isn’t really optimal; you could instead alternate the two textures back and forth so that each one takes a turn as lastFrame and as nextFrame on alternating frames, which removes the copy. But this is fine for demonstration purposes.
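Here’s a minimal sketch of that alternating (“ping-pong”) bookkeeping. It assumes each target pairs a texture with its own framebuffer (built with gl.createFramebuffer and gl.framebufferTexture2D); the PingPong class and its step method are hypothetical helpers, and the WebGL calls in the trailing comment show roughly where they would slot into drawScene.

```javascript
// Minimal ping-pong bookkeeping: two render targets whose roles swap every
// step, so the shader never samples the texture it is currently writing.
class PingPong {
  constructor(first, second) {
    this.read = first;   // last frame: bound as the sampler input
    this.write = second; // next frame: attached to the framebuffer we draw into
  }

  swap() {
    [this.read, this.write] = [this.write, this.read];
  }

  // Run one simulation step from `read` into `write`, then swap roles so the
  // freshly written frame becomes the next step's input. Returns that frame.
  step(renderStep) {
    renderStep(this.read, this.write);
    this.swap();
    return this.read;
  }
}

// Hypothetical use inside drawScene, where each target is { texture, framebuffer }:
// const newest = targets.step((read, write) => {
//   gl.bindFramebuffer(gl.FRAMEBUFFER, write.framebuffer);
//   gl.bindTexture(gl.TEXTURE_2D, read.texture);
//   gl.viewport(0, 0, TEXTURE_BUFFER_SIZE, TEXTURE_BUFFER_SIZE);
//   render();
// });
// gl.bindFramebuffer(gl.FRAMEBUFFER, null);
// gl.bindTexture(gl.TEXTURE_2D, newest.texture);
// gl.viewport(0, 0, gl.canvas.width, gl.canvas.height);
// render();
```

The trade-off: a second framebuffer (or a framebufferTexture2D re-attach each frame) in exchange for skipping a full-texture copy every frame.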

```javascript
function render() {
  gl.drawArrays(gl.TRIANGLE_FAN,
    0, // starting offset
    4, // number of vertices to draw
  );
}

function drawScene(time) {
  // Set up our program and inputs
  gl.useProgram(program);

  // - set up a new random seed for the shader this frame
  gl.uniform1f(seedLocation, Math.random());

  // - this is the distance in texture-space coordinates from one pixel of the texture to the next
  gl.uniform1f(cellStepLocation, 1 / TEXTURE_BUFFER_SIZE);

  // activate and bind a data source for position coordinates
  gl.enableVertexAttribArray(positionLocation);
  gl.bindBuffer(gl.ARRAY_BUFFER, positionBuffer);
  gl.vertexAttribPointer(
    positionLocation,
    2,        // 2 coordinates per vertex
    gl.FLOAT, // ... of type float
    false,    // don't normalize the data
    0,        // stride through array (0 = tightly packed)
    0,        // offset into array
  );

  // activate and bind a data source for the texture coordinates of the vertices
  gl.enableVertexAttribArray(texcoordLocation);
  gl.bindBuffer(gl.ARRAY_BUFFER, texcoordBuffer);
  gl.vertexAttribPointer(texcoordLocation, 2, gl.FLOAT, false, 0, 0);

  // the shader's sampler reads from texture unit 0
  gl.uniform1i(textureLocation, 0);

  // render to texture from lastFrame into nextFrame's framebuffer
  gl.bindFramebuffer(gl.FRAMEBUFFER, fb);
  gl.bindTexture(gl.TEXTURE_2D, lastFrame);
  gl.viewport(0, 0, TEXTURE_BUFFER_SIZE, TEXTURE_BUFFER_SIZE);
  render();

  // copy our nextFrame to our lastFrame (this works because the framebuffer is still bound
  // and the active texture is the one we bound lastFrame to).
  gl.copyTexImage2D(gl.TEXTURE_2D, 0, gl.RGBA, 0, 0, TEXTURE_BUFFER_SIZE, TEXTURE_BUFFER_SIZE, 0);

  // render to scene from nextFrame
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  gl.bindTexture(gl.TEXTURE_2D, nextFrame);
  gl.viewport(0, 0, gl.canvas.width, gl.canvas.height);
  render();

  // ... and of course, we'll want to re-render as soon as the browser wants more animation.
  requestAnimationFrame(drawScene);
}
```

That’s the loop for drawing the scene. Note that we delegate to a (very simple) render function that just draws a triangle fan covering the whole viewport. The interesting part is where we set up the program, configure our shader inputs and framebuffer, render, and then unbind the framebuffer and bind the nextFrame texture to render (finally) to the canvas.

That’s all fine enough, but it’s ultimately boring if we don’t actually draw some fire.

Reverse-engineering Xflame’s effect

I’ve been a fan of the “Xflame” screensaver (originally by “The RasterMan” (Carsten Haitzler) and others) for a while, and I figured it’d be a good starting point for playing with fire effects. I fished around for the source a bit and found a port to SDL by Sam Lantinga. This seemed as good a place as any to work from. Looking over the code a bit, I found the core render function that stepped a frame forward:

```c
// from xflame.c, copyright 1996 Carsten Haitzler, distributed as Freeware
// ("You may copy it, modify it or do with it as you please, but you may not claim
// copyright on any code wholly or partly based on this code. I accept no
// responisbility for any consequences of using this code, be they proper or
// otherwise.")
void
XFProcessFlame(int *f, int w, int ws, int h, int *ff)
{
  /*This function processes entire flame array */
  int x,y,*ptr,*p,tmp,val;

  for (y=(h-1);y>=2;y--)
    {
      for (x=1;x<(w-1);x++)
        {
          ptr=f+(y<<ws)+x;
          val=(int)*ptr;
          if (val>MAX) *ptr=(int)MAX;
          val=(int)*ptr;
          if (val>0)
            {
              tmp=(val*VSPREAD)>>8;
              p=ptr-(2<<ws);
              *p=*p+(tmp>>1);
              p=ptr-(1<<ws);
              *p=*p+tmp;
              tmp=(val*HSPREAD)>>8;
              p=ptr-(1<<ws)-1;
              *p=*p+tmp;
              p=ptr-(1<<ws)+1;
              *p=*p+tmp;
              p=ptr-1;
              *p=*p+(tmp>>1);
              p=ptr+1;
              *p=*p+(tmp>>1);
              p=ff+(y<<ws)+x;
              *p=val;
              if (y<(h-1)) *ptr=(val*RESIDUAL)>>8;
            }
        }
    }
}
```

Exciting! It’s been a little while since I’ve done some reverse-engineering!

I started by looking through the rest of the code (call sites for this function in particular) to understand what the input arguments meant. I was able to piece together:

- f pointer: pointer to the flame array
- w: width of the flame buffer
- h: height of the flame buffer
- ws: “power of flame width,” i.e. the power of 2 that each row is padded out to. Each row occupies 2^ws ints, so `y << ws` is the offset of row y (if w is 255, ws is 8). I bet it means “w-scale.”
- ff pointer: pointer to a separate flame array. It turns out this is redundant (each processed cell’s clamped value is simply copied into it before it decays) and is probably a separate memory buffer from f so that the renderer can hand it off to a drawing function in a parallel thread and trust it won’t be corrupted by a second update pass while it’s actually drawing.

I then grabbed the constant values and made a guess at what they meant…

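- MAX: 300, the maximum value of a cell
- VSPREAD: 78, how much of a cell’s energy (out of 256) transfers vertically
- HSPREAD: 26, how much of a cell’s energy (out of 256) transfers horizontally
- RESIDUAL: 68, how much of a cell’s energy (out of 256) stays in the cell after spreading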

Armed with this knowledge, I went line-by-line through the algorithm and translated it to pseudo-code to wrap my brain around it.

- Step backwards (bottom to top) through every row except the top two (rows 0 and 1, since each cell writes up to two rows above itself), and through every column except the first and last:
  - Point ptr at row y, column x: f + (y << ws) + x, i.e. offset y * 2^ws + x
  - Clamp the value to MAX to prevent runaway heat
  - If the heat value for this cell > 0:
    - The v update
      - Set tmp to heat in this cell * VSPREAD (78) / 256
      - Grab ptr to the cell 2 rows up from where we are, add tmp / 2
      - Grab ptr to the cell 1 row up from where we are, add tmp [Ah, so the farther cell gets half the spread energy and the nearer cell gets the full amount]
    - The h update
      - Set tmp to heat in this cell * HSPREAD (26) / 256
      - Get ptr to the cell 1 row up and 1 column left, add tmp
      - Get ptr to the cell 1 row up and 1 column right, add tmp
      - Get ptr to the cell 1 column left, add tmp / 2
      - Get ptr to the cell 1 column right, add tmp / 2
    - Set the same cell in flame2 (ff) to the heat in this cell [OH, flame2 is just a render buffer! We pass it to the render functions, probably so we can thread generating the next frame and rendering the current one]
    - Decay the heat in every cell except those in the bottom row (y = h - 1, where the embers live): set the cell value to value * RESIDUAL (68) / 256
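To double-check my reading, here’s a direct JavaScript transliteration of XFProcessFlame, with pointer arithmetic replaced by array indices into an Int32Array. It’s a sketch under my assumptions: processFlame is my own name, rows are 2^ws entries apart with row 0 at the top, and RESIDUAL is 68 as in the pseudo-code above.

```javascript
// Xflame's constants (the spread/residual values are fractions out of 256).
const MAX = 300;
const VSPREAD = 78;
const HSPREAD = 26;
const RESIDUAL = 68;

// f, ff: Int32Arrays with rows 2**ws entries apart; row 0 is the top of the
// screen and row h-1 is the ember row. Updates f in place, like the C code.
function processFlame(f, w, ws, h, ff) {
  for (let y = h - 1; y >= 2; y--) {   // bottom to top, skipping rows 0 and 1
    for (let x = 1; x < w - 1; x++) {  // skip the first and last column
      const i = (y << ws) + x;         // same as ptr = f + (y<<ws) + x
      if (f[i] > MAX) f[i] = MAX;      // clamp runaway heat
      const val = f[i];
      if (val > 0) {
        // v update: full share one row up, half share two rows up
        let tmp = (val * VSPREAD) >> 8;
        f[i - (2 << ws)] += tmp >> 1;
        f[i - (1 << ws)] += tmp;
        // h update: full share to the upper diagonals, half to the sides
        tmp = (val * HSPREAD) >> 8;
        f[i - (1 << ws) - 1] += tmp;
        f[i - (1 << ws) + 1] += tmp;
        f[i - 1] += tmp >> 1;
        f[i + 1] += tmp >> 1;
        ff[i] = val;                   // hand the pre-decay value to the renderer
        if (y < h - 1) f[i] = (val * RESIDUAL) >> 8; // embers don't decay
      }
    }
  }
}
```

Because the update happens in place while walking upward, heat deposited into a row gets picked up again when that row is processed later in the same pass. On a small 8×6 buffer with a single 256-heat ember, one call already carries heat all the way to row 0.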

… ah! This is basically a convolution (not exactly, since Xflame updates the buffer in place as it walks upward, so heat a row has just received gets spread again in the same pass). I’ve written code like this in the past; it’s broadly based on the conduction principle “The heat in a given unit of matter will diffuse into all the matter next to it.” Only in this case, we want to simulate the fire flowing upwards, so the diffusion kernel looks like this:

| | left | center | right |
|---|---|---|---|
| 2 rows above | | vdecay / 2 | |
| 1 row above | hdecay | vdecay | hdecay |
| same row | hdecay / 2 | SOURCE | hdecay / 2 |

All well and good, except that in GLSL shaders, we act on each fragment and decide what color it should be. So instead of stepping through each cell of the fire texture and diffusing outwards, we’ll collect inwards for each pixel of the frame buffer.

Here’s the “flipped around” kernel we can use for our fragment shader.

| | left | center | right |
|---|---|---|---|
| same row | hdecay / 2 | TARGET | hdecay / 2 |
| 1 row below | hdecay | vdecay | hdecay |
| 2 rows below | | vdecay / 2 | |
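To convince myself the flipped kernel really is the same stencil seen from the other side, here’s a small CPU sketch of one gather step. It’s an illustration, not code from the demo: gatherStep is a hypothetical name, the grid is a row-major Float32Array with row 0 at the bottom (as in texture space), edges clamp like CLAMP_TO_EDGE sampling, the ember row is simply copied through, and the target keeps part of its own heat using Xflame’s RESIDUAL (68/256). Weights are Xflame’s constants over 256, without any fudge factor.

```javascript
// Weights: Xflame's HSPREAD, VSPREAD and RESIDUAL over 256 (no fudge factor).
const H_DECAY = 26 / 256;
const V_DECAY = 78 / 256;
const CELL_DECAY = 68 / 256;

// One gather ("pull") step over a size x size grid stored row-major,
// with row 0 at the BOTTOM, as in texture space.
function gatherStep(prev, size) {
  // Clamp lookups to the grid edge, like CLAMP_TO_EDGE texture sampling.
  const at = (x, y) => {
    const cx = Math.min(Math.max(x, 0), size - 1);
    const cy = Math.min(Math.max(y, 0), size - 1);
    return prev[cy * size + cx];
  };
  const next = new Float32Array(size * size);
  for (let y = 0; y < size; y++) {
    for (let x = 0; x < size; x++) {
      if (y === 0) {
        next[x] = prev[x]; // ember row: copied through (flicker handled separately)
        continue;
      }
      let v = CELL_DECAY * at(x, y);                        // TARGET keeps part of its heat
      v += (H_DECAY / 2) * (at(x - 1, y) + at(x + 1, y));   // same row, left and right
      v += H_DECAY * (at(x - 1, y - 1) + at(x + 1, y - 1)); // one row below, diagonals
      v += V_DECAY * at(x, y - 1);                          // directly below
      v += (V_DECAY / 2) * at(x, y - 2);                    // two rows below
      next[y * size + x] = Math.min(Math.max(v, 0), 1);     // 8-bit targets saturate
    }
  }
  return next;
}
```

Seeding a single hot cell and running one step leaves 68/256 on the cell itself, 13/256 on its left and right neighbors, 26/256 on the upper diagonals, 78/256 directly above and 39/256 two rows up: exactly the scatter kernel’s pattern. Unlike Xflame’s in-place pass, nothing moves more than two rows per step.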

The target cell also keeps part of its own previous heat: we scale it by a “celldecay” value (the counterpart of Xflame’s RESIDUAL) and add it to the sum, to simulate some general loss of thermal energy to entropy. The smaller that factor, the quicker the fire disappears.
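Adding up Xflame’s weights shows why some extra loss is needed at all. Here’s the arithmetic as a quick JavaScript sketch, using the constants listed earlier: each unit of heat hands out 263/256 of itself per step, so left alone the field gains energy. Xflame caps that with MAX; in the shader below, as I read it, that’s the job of dividing every weight by the fudge factor of 1.03, which brings the total just under 1.

```javascript
// Xflame's per-cell weights, each out of 256.
const HSPREAD = 26, VSPREAD = 78, RESIDUAL = 68;

// Total weight one unit of heat contributes back into the grid per step:
// two side neighbors at HSPREAD/2, two upper diagonals at HSPREAD,
// one cell up at VSPREAD, two cells up at VSPREAD/2, plus what stays put.
const totalGain =
  (2 * (HSPREAD / 2) + 2 * HSPREAD + VSPREAD + VSPREAD / 2 + RESIDUAL) / 256;

const fudge = 1.03; // the shader's fudge factor
const shaderGain = totalGain / fudge;

console.log(totalGain);             // 1.02734375 (263 / 256)
console.log(shaderGain.toFixed(4)); // 0.9974
```

A gain just below 1 means heat slowly drains away unless the embers keep feeding it, which is what keeps the flames from saturating the whole screen.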

Here’s the resulting logic.

```glsl
// (u_texture, u_cellStep, u_seed, v_texcoord, prand() and valueToColor()
// are declared earlier in the shader source.)
void main() {
  float cellStep = u_cellStep;

  // I played around with all these constants to get a look I was happy with
  // - a general "fudge factor" that scales every weight down slightly, so the
  //   weights sum to just under 1 and heat drains away instead of piling up
  const float fudge = 1.03;
  // - each of the decay constants is "how much do I multiply that cell by before summing it in?"
  //   (they start from Xflame's HSPREAD, VSPREAD and RESIDUAL divided by 256)
  const float hDecay = 0.1015625 / fudge;
  const float hDecay2 = hDecay / 2.0;
  const float vDecay = 0.3046875 / fudge;
  const float vDecay2 = vDecay / 2.0;
  const float cellDecay = 0.265625 / fudge;
  // - the total range of an ember's random jump per frame
  const float fireVariance = 0.25;

  if (v_texcoord.y <= cellStep) {
    // for the bottom row of texels, we want to "flicker them": brighten or dim them a random amount.
    float distort;
    prand(u_seed, v_texcoord.x, distort);
    float cellValue = texture2D(u_texture, v_texcoord).x + fireVariance * (distort - 0.5);
    vec4 color;
    valueToColor(cellValue, color);
    gl_FragColor = color;
  } else {
    // Here is the convolution logic based on Xflame
    float cellValue = texture2D(u_texture, v_texcoord).x * cellDecay;
    cellValue += hDecay2 * texture2D(u_texture, v_texcoord + vec2(-cellStep, 0)).x;
    cellValue += hDecay2 * texture2D(u_texture, v_texcoord + vec2(cellStep, 0)).x;
    cellValue += hDecay * texture2D(u_texture, v_texcoord + vec2(-cellStep, -cellStep)).x;
    cellValue += hDecay * texture2D(u_texture, v_texcoord + vec2(cellStep, -cellStep)).x;
    cellValue += vDecay * texture2D(u_texture, v_texcoord + vec2(0, -cellStep)).x;
    cellValue += vDecay2 * texture2D(u_texture, v_texcoord + vec2(0, -cellStep * 2.0)).x;
    vec4 color;
    valueToColor(cellValue, color);
    gl_FragColor = color;
  }
}
```

We’re almost done, but you’ll notice one interesting function in there: prand. What’s up with that?

Random numbers on the cheap for graphics

The Book of Shaders has a great tutorial on getting (pseudo-)random numbers for graphical purposes. The key idea is that they don’t have to be cryptographically secure; they just have to look noisy enough to a human eye. To that end, blowing up the amplitude of a sine wave and then taking the fractional component of the value gives you a jagged sawtooth whose teeth bunch up where the sine is steep and spread out near its peaks. If you blow it up far enough, the result starts to trip over the gaps in floating-point representation, and you get a function where, for any given floating-point input, the output for the next sequential floating-point number is hard for your brain to predict. Throw a seed on there to shift the input phase and we’re pretty happy.

I’m almost embarrassed at how simple this generator is!

```glsl
// Get a pseudorandom value between 0 and 1 from a seed and a lookup
// See https://thebookofshaders.com/10/
void prand(in float seed, in float lookup, out float result) {
  result = fract(sin(seed + lookup) * 10000.0);
}
```
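To eyeball what prand produces without a GPU, here’s a rough JavaScript port. Math.fround rounds to 32-bit floats at each step, but GPUs evaluate sin() with their own precision, so treat the exact values as an approximation; the seed 0.42 and the row of 8 embers are arbitrary example inputs.

```javascript
// GLSL fract(): the fractional part, x - floor(x), always in [0, 1).
function fract(x) {
  return x - Math.floor(x);
}

// Rough CPU port of the shader's prand(), rounding to 32-bit floats along the way.
function prand(seed, lookup) {
  const phase = Math.fround(seed + lookup);
  const stretched = Math.fround(Math.fround(Math.sin(phase)) * 10000);
  return fract(stretched);
}

// One frame's worth of values for 8 embers, sampled at texel centers.
const seed = 0.42;
const samples = Array.from({ length: 8 }, (_, i) => prand(seed, (i + 0.5) / 8));
console.log(samples.map((v) => v.toFixed(3)).join(" "));
```

Neighboring texel centers differ by only 0.125 in the lookup, yet after the ×10000 stretch their fractional parts land far apart, which is all the embers need.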

We treat the bottom row of texels in the texture we’re rendering to as “embers” that feed the fire; on every frame of animation, we pick a random number for each ember and grow or shrink the ember’s intensity by fireVariance * (distort - 0.5), i.e. a random amount in [-0.125, 0.125) with fireVariance at 0.25.
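On the CPU, that ember update amounts to the following sketch. flickerEmbers is a hypothetical name, the prand here is a plain double-precision port, and the clamp to [0, 1] assumes the heat rides in the red channel of an 8-bit render target, which saturates (valueToColor isn’t shown here, so that part is an assumption).

```javascript
// Total range of an ember's random jump per frame, as in the shader.
const FIRE_VARIANCE = 0.25;

// Double-precision port of the shader's prand(): fract(sin(seed + lookup) * 10000).
function prand(seed, lookup) {
  const v = Math.sin(seed + lookup) * 10000;
  return v - Math.floor(v);
}

// Brighten or dim each ember by FIRE_VARIANCE * (r - 0.5), r in [0, 1),
// i.e. by a random amount in [-0.125, 0.125), then clamp like an 8-bit target.
function flickerEmbers(embers, seed) {
  return embers.map((value, i) => {
    const u = (i + 0.5) / embers.length; // texel-center x coordinate
    const jitter = FIRE_VARIANCE * (prand(seed, u) - 0.5);
    return Math.min(Math.max(value + jitter, 0), 1);
  });
}
```

Calling it as flickerEmbers(embers, Math.random()) once per frame mirrors the fresh u_seed uniform the JavaScript uploads every frame.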

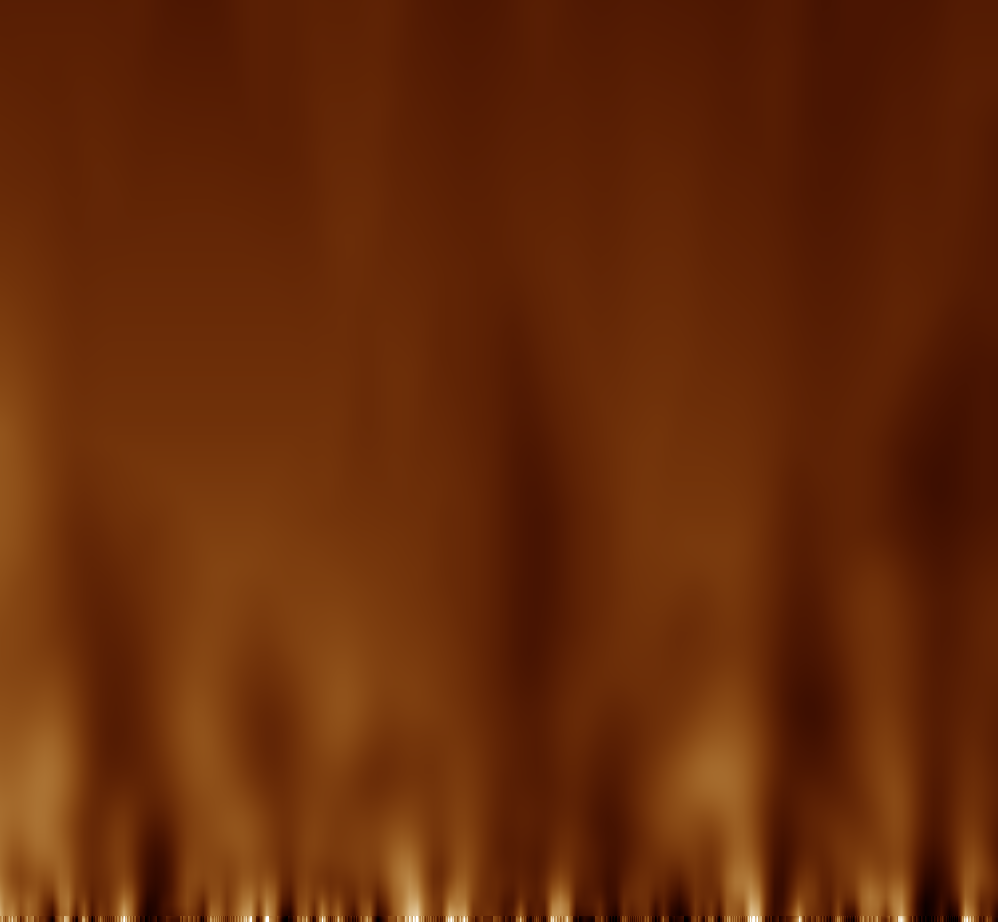

And that’s it! You can see the shader live here.

Limitations / drawbacks

While this effect is a good starting point, it has some limitations I’m looking into overcoming:

- The fire is a bit “wispy.” I think I can address that by playing with my numbers some more, as well as the color palette of the resulting effect.

- Since the fire spreads as the convolution of a kernel, it can travel at most two texels upward and one texel sideways per frame (the kernel doesn’t look any farther than that).

- The need to render-to-texture and then apply that texture on the next frame is inefficient and consumes more memory than I’d like (ideally, it’d be cool if we could omit the two-pass render).

- Real fire has vortices and other collective effects that make it flow more wickedly than this simple upward motion; this motion looks more like a set of blowtorches than a crackling wood fire. It’s been recommended to me that I look into applying “curl noise” to get those effects.

… but, while researching curl noise, I stumbled across an entirely different approach using “fractional Brownian motion” (a.k.a. “fractal Brownian motion”). We’ll talk about that next time.
