Playing with AprilTags: Python Recognizer

An announcement by FIRST Robotics earlier this year revealed an interesting bit of news: next year’s kit would include AprilTags as vision targets. I’m completely unfamiliar with these, so I figured it’d be fun to dive in and see what they’re all about.

Generating some tags

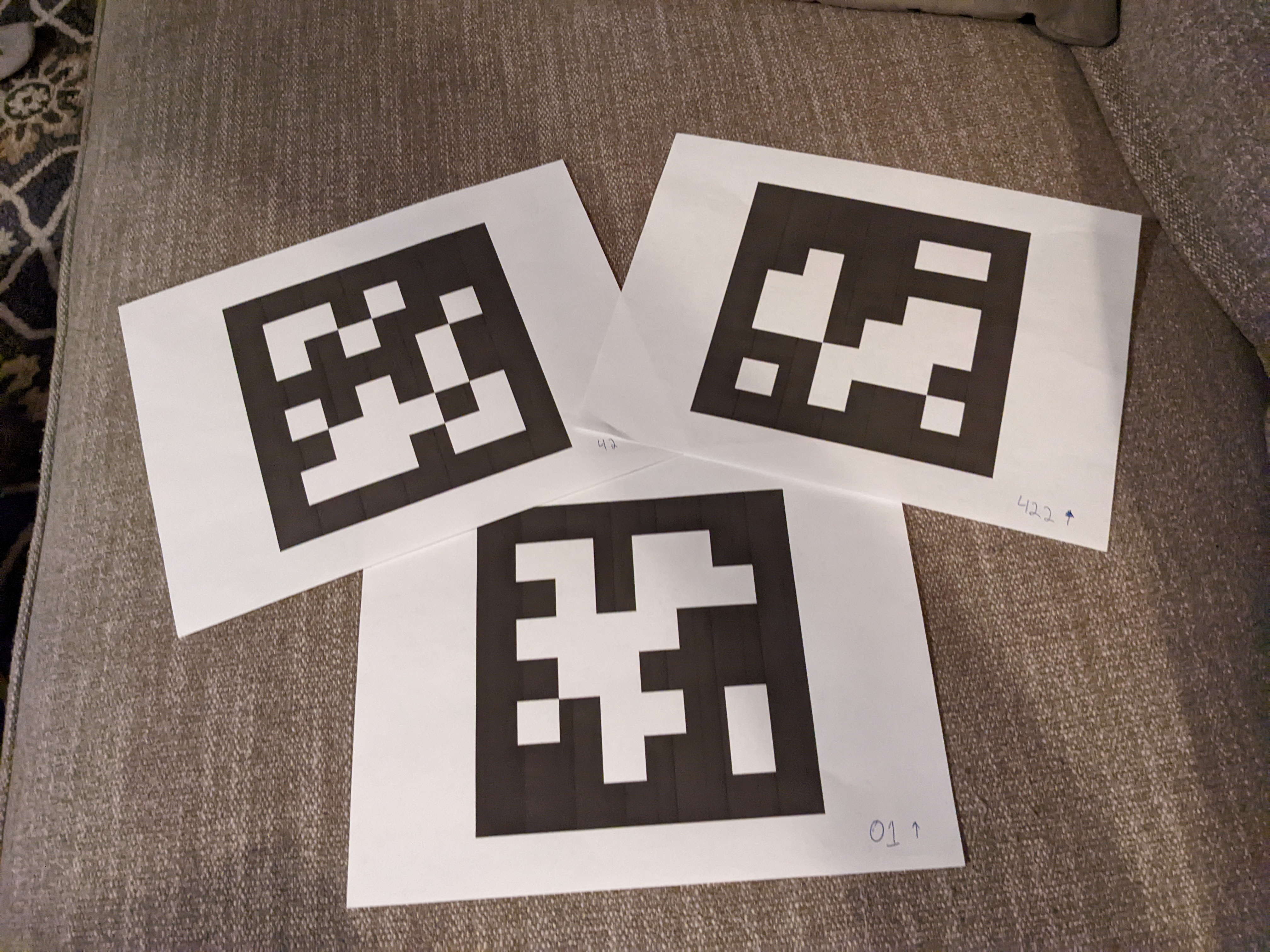

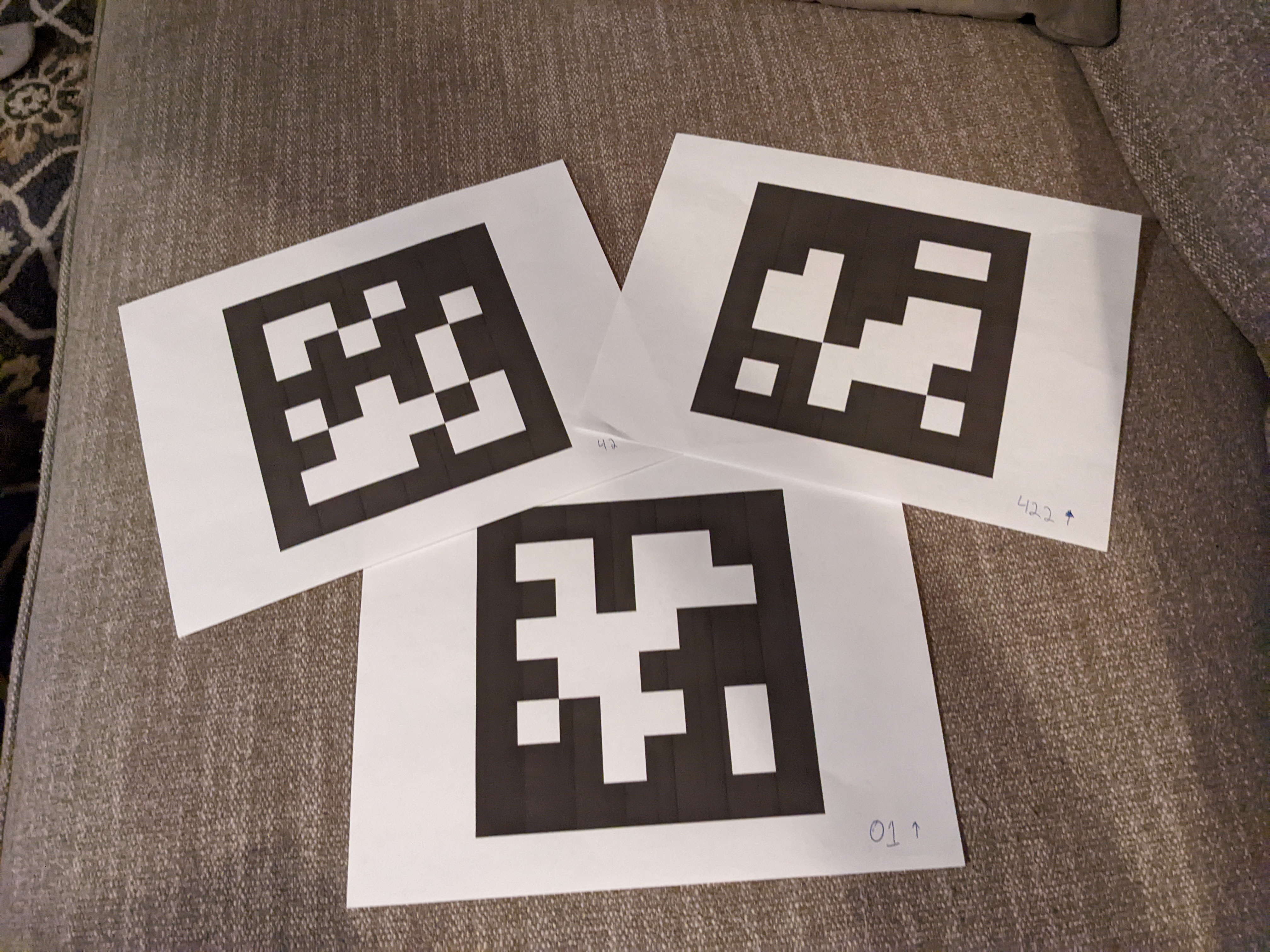

AprilTags are a so-called “fiducial” system: each tag conveys not only a position and orientation but also an identifier code, via a block encoding scheme. They’re conceptually similar to a barcode, but with design features that make them easy to recognize from multiple angles. FRC is planning to use the 36h11 family, so I grabbed a few samples from the repository. They’re stored in a minimized “one pixel per block” format, so to make them tractable I blew them up and bordered them with ImageMagick (convert tag36_11_00001.png -scale 10000% -bordercolor white -border 200 tag36_11_00001-large.png) and ran them out on the printer. I grabbed a few tag IDs to play with: 1, 42, and 422.
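The same enlargement can also be sketched in Python. This is only a rough equivalent of the ImageMagick command, not a replacement for it: it assumes the tag has already been loaded as a 2-D grayscale NumPy array (for example with cv2.imread and cv2.IMREAD_GRAYSCALE), and enlarge_tag is a made-up helper name. The defaults mirror the -scale 10000% and -border 200 arguments.

```python
import numpy as np


def enlarge_tag(tag, scale=100, border=200):
    """Blow up a one-pixel-per-block tag and surround it with a white border.

    `tag` is assumed to be a 2-D uint8 array (0 = black, 255 = white).
    """
    # Repeat every pixel `scale` times along both axes so block edges stay
    # crisp (no interpolation between neighbouring blocks).
    enlarged = np.repeat(np.repeat(tag, scale, axis=0), scale, axis=1)
    # Pad all four sides with white, like -bordercolor white -border 200.
    return np.pad(enlarged, border, mode="constant", constant_values=255)
```

Pixel replication matters here: a smoothing resize would soften the block boundaries that the detector relies on.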

This’ll get us started

Recognizing the tags

I don’t have access to the beta for the AprilTag integration into the FRC codebase yet, but I was able to get started by playing around with recognition via OpenCV and a Python library. Setup was pretty straightforward:

sudo apt-get install python3

sudo apt-get install python3-pip

pip install opencv-python

pip install apriltag

(Note: at least one of those required an apt-get install of one more dependency for building the “wheel,” but I can’t remember which one. Fortunately, pip was pretty good about clearly stating which dependency was missing.)

Once that was done, I was able to bash together a pretty simple Python script to do the following:

- Fetch the camera feed

- Grayscale it with OpenCV

- Feed the grayscale into the apriltag recognizer

- If a tag was found, scribble some info on the camera feed to make it easy to visualize the recognized data

- Render the updated (color) camera feed

The code is below.

# Copyright 2022 Mark T. Tomczak

#

# Permission is hereby granted, free of charge, to any person obtaining a copy of

# this software and associated documentation files (the "Software"), to deal in

# the Software without restriction, including without limitation the rights to

# use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of

# the Software, and to permit persons to whom the Software is furnished to do so,

# subject to the following conditions:

#

# The above copyright notice and this permission notice shall be included in all

# copies or substantial portions of the Software.

#

# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS

# FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR

# COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER

# IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN

# CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

import cv2
import apriltag

LINE_LENGTH = 5
CENTER_COLOR = (0, 255, 0)
CORNER_COLOR = (255, 0, 255)

### Some utility functions to simplify drawing on the camera feed

# draw a crosshair
def plotPoint(image, center, color):
    center = (int(center[0]), int(center[1]))
    image = cv2.line(image,
                     (center[0] - LINE_LENGTH, center[1]),
                     (center[0] + LINE_LENGTH, center[1]),
                     color,
                     3)
    image = cv2.line(image,
                     (center[0], center[1] - LINE_LENGTH),
                     (center[0], center[1] + LINE_LENGTH),
                     color,
                     3)
    return image

# plot a little text
def plotText(image, center, color, text):
    center = (int(center[0]) + 4, int(center[1]) - 4)
    return cv2.putText(image, str(text), center, cv2.FONT_HERSHEY_SIMPLEX,
                       1, color, 3)

# setup and the main loop
detector = apriltag.Detector()
cam = cv2.VideoCapture(0)

looping = True

while looping:
    result, image = cam.read()
    if not result:
        # no frame came back (camera missing or unplugged); bail out
        # rather than crash in cvtColor
        print("Could not read a frame from the camera")
        break

    grayimg = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # look for tags
    detections = detector.detect(grayimg)
    if not detections:
        print("Nothing")
    else:
        # found some tags, report them and update the camera image
        for detect in detections:
            print("tag_id: %s, center: %s" % (detect.tag_id, detect.center))
            image = plotPoint(image, detect.center, CENTER_COLOR)
            image = plotText(image, detect.center, CENTER_COLOR, detect.tag_id)
            for corner in detect.corners:
                image = plotPoint(image, corner, CORNER_COLOR)

    # refresh the camera image
    cv2.imshow('Result', image)

    # let the system event loop do its thing
    key = cv2.waitKey(100)

    # terminate the loop if the 'Return' key is hit
    if key == 13:
        looping = False

# loop over; clean up and dump the last updated frame for convenience of debugging
cam.release()
cv2.destroyAllWindows()
if image is not None:
    cv2.imwrite("final.png", image)

I gave it a whirl and it basically works!

Detection weaknesses

While detection was fairly consistent, there were some notable weaknesses compared with detecting retroreflective tape:

- The entire tag needs to be in frame. A partially visible tag quickly stops being recognized, as does a tag with anything obscuring it.

- Recognizing the tag seems very sensitive to motion blur. If I move at more than 1.5 camera-widths a second, recognition breaks down. This could be a real concern in a competition environment where robots move fast and are frequently jostled.
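To put a rough number on the motion-blur point, here is a back-of-the-envelope sketch of how far a tag smears across the image during a single exposure. The 640-pixel frame width and 1/30 s exposure are assumptions for illustration only (I didn't measure either), and blur_pixels is a hypothetical helper, not part of any library.

```python
def blur_pixels(speed_widths_per_s, frame_width_px, exposure_s):
    """Approximate how far a tag smears across the image during one exposure."""
    return speed_widths_per_s * frame_width_px * exposure_s


# Hypothetical numbers: a 640-pixel-wide frame and a 1/30 s exposure,
# moving at the 1.5 camera-widths per second where recognition broke down.
smear = blur_pixels(1.5, 640, 1 / 30)
print(f"{smear:.0f} px of smear per frame")
```

Under those assumptions the smear comes out to about 32 pixels, which could easily be wider than a single block of a tag seen from a distance. If that is what's going on, a shorter exposure time would likely help more than any software tweak.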

I’ve only scratched the surface, so it’s unclear whether there are configuration options I could tune on the AprilTag library to clean up these issues. Even with the issues, the tags are fun to play with and I’m looking forward to seeing them in the 2023 FIRST competition!
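As a starting point for that tuning, here is a minimal sketch. It assumes the pip apriltag package is the Python wrapper whose Detector accepts an apriltag.DetectorOptions object with families, quad_decimate, quad_blur, and refine_edges keyword arguments; check that against the version you have installed. The helper names detector_settings and make_detector are made up for this example, and the values are untested starting points rather than recommendations.

```python
def detector_settings(quad_decimate=1.0, quad_blur=0.0, refine_edges=True):
    """Collect the keyword arguments to experiment with in one place."""
    return {
        "families": "tag36h11",          # the family used in this post
        "quad_decimate": quad_decimate,  # >1.0 detects quads on a downsampled image
        "quad_blur": quad_blur,          # Gaussian blur sigma applied before quad detection
        "refine_edges": refine_edges,    # snap quad edges to strong gradients
    }


def make_detector(settings):
    # Imported here so the settings helper works without the library installed.
    import apriltag

    return apriltag.Detector(apriltag.DetectorOptions(**settings))
```

Swapping detector = apriltag.Detector() for detector = make_detector(detector_settings(quad_decimate=2.0)) would be one experiment to run. Whether any of these settings actually helps with motion blur or partially hidden tags is an open question.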
